As part of both a side project and a work project, I recently had a couple of larger datasets that I needed to load into a database and search. I had previously used Azure Blob Storage but hadn’t done much with Azure Table Storage. Naturally I needed to use PowerShell for this, and I quickly found that the AzureRM PowerShell Module could do the basics but wasn’t going to scale to the size of my datasets. Some trial and error later I got it to do what I needed, and then, using the Azure Table Service REST API, I was able to easily query the dataset.

Note: My first load of one of the datasets (~35k rows), a row at a time, took close to 5 hours. Once I got Batch Operations working for inserting the dataset into Table Storage, I got that down to ~4 minutes. This post details how I did it.

Prerequisites

You will need:

- the Azure Storage Explorer which you can get from here

- An Azure Storage Account

- you can create one through many different methods, such as the Azure Portal UI, Azure Portal CLI, Azure CLI or PowerShell

- PowerShell 5.1 or later and the AzureRM PowerShell Module

Creating an Azure Table Storage Table

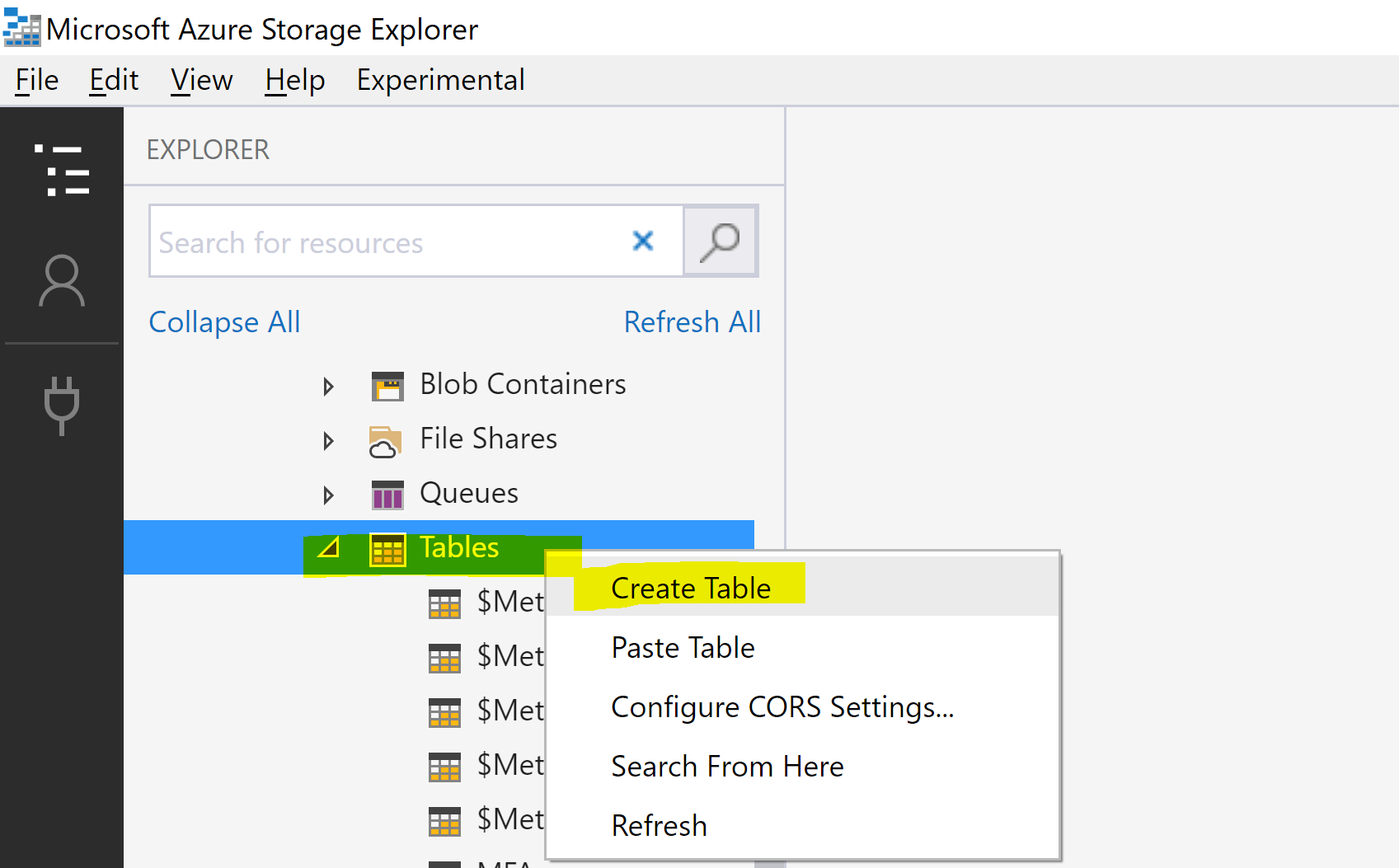

Using the Azure Storage Explorer, authenticate to Azure and navigate to your Storage Account. Expand the Storage Account, right-click Tables and select Create Table. Give the Table a name and hit Enter. Whilst you can also create the Table via the AzureRM PowerShell Module or the Azure CLI, it is super quick and easy using the Azure Storage Explorer, which will also be used later to verify the loaded dataset.

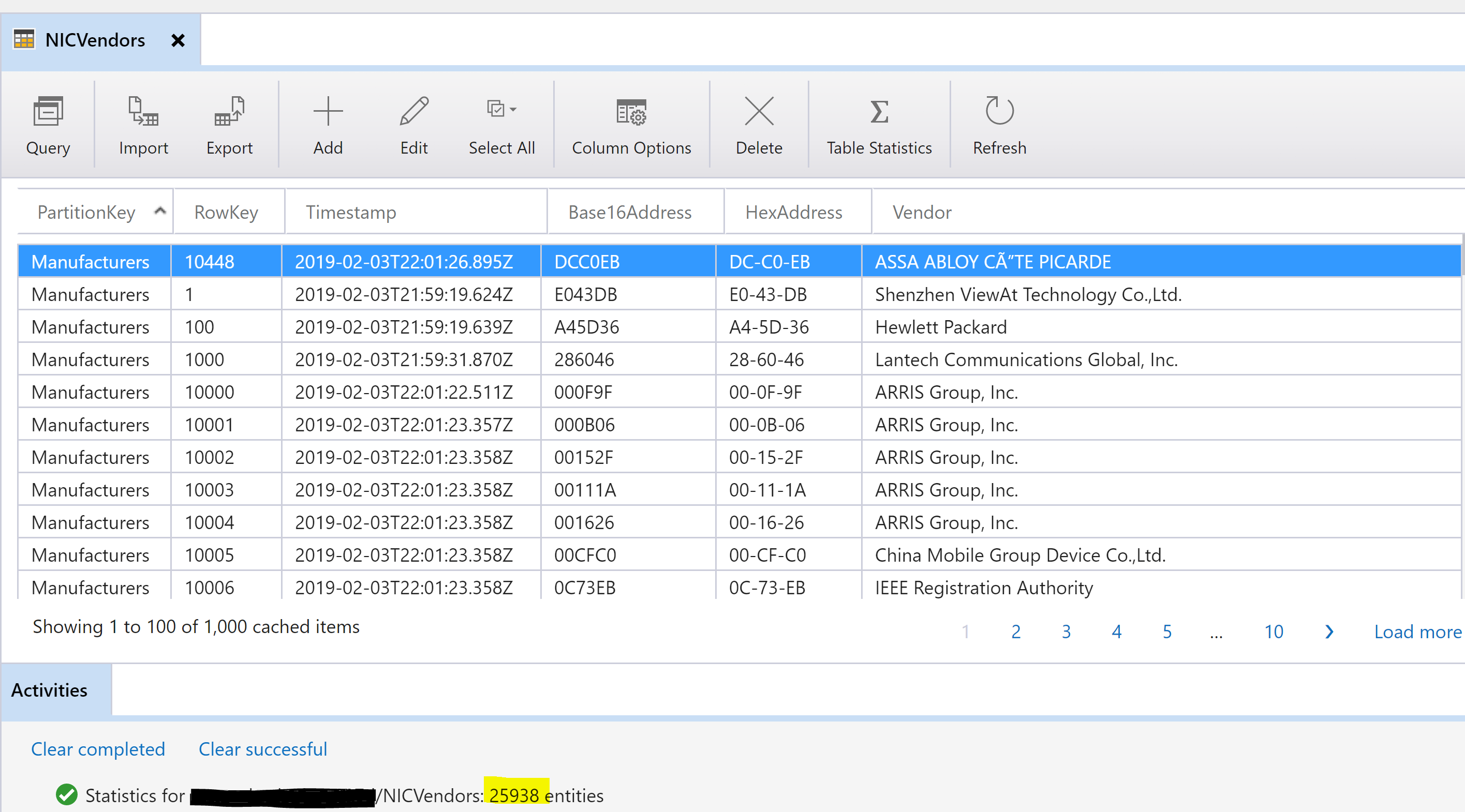

Using the Azure Storage Explorer I created a Table named NICVendors.

Loading Data into Azure Table Service

The example data I will use here is the dataset from a post last year for MAC Address Vendors lookup. Rather than exporting to XML I will load it into Azure Table Storage. The following script will obtain the Vendors list from here and save it to your local disk. This will provide ~26k entries and is a good test for loading into Azure Table Service.

Update Line 3 for where you want to output the file to.

With the dataset in memory we can parse it and insert each row into the table. The quickest method is to batch the inserts. The maximum number of rows allowed in a batch is 100, and every row in a batch must use the same PartitionKey.
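As a rough illustration of the 100-row limit, here is a minimal sketch of splitting an in-memory dataset into batches. `Split-IntoBatch` is a hypothetical helper name, not part of any module, and the sketch assumes every row is destined for the same partition.

```powershell
# Hypothetical helper: yields the input rows in arrays of at most $BatchSize items.
# Assumes all rows will be written to the same partition.
function Split-IntoBatch {
    param(
        [Parameter(Mandatory)] [object[]] $InputObject,
        [ValidateRange(1, 100)] [int] $BatchSize = 100
    )
    for ($i = 0; $i -lt $InputObject.Count; $i += $BatchSize) {
        $end = [Math]::Min($i + $BatchSize, $InputObject.Count) - 1
        # The leading comma stops PowerShell from unrolling each batch into the pipeline
        , $InputObject[$i..$end]
    }
}

# Stand-in data: 250 rows should come back as batches of 100, 100 and 50
$rows = 1..250
$batches = @(Split-IntoBatch -InputObject $rows)
$batches | ForEach-Object { "Batch of $($_.Count) rows" }
```

Each array that comes back can then be submitted to the table as a single batch operation.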

Update:

- Line 2 for your Azure Subscription

- Line 3 for the Resource Group your Storage Account is located in

- Line 4 for the name of your Storage Account

- Line 5 for the name of the Table you created

- Line 6 for the name of the Partition for the dataset
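For orientation, a batched insert along these lines might look like the sketch below. It is not the original script. It assumes the AzureRM and Azure.Storage modules are loaded (so the `Microsoft.WindowsAzure.Storage` types are available) and that you are already signed in. The function name `Add-VendorBatch` and the `MacPrefix` and `Vendor` property names are hypothetical stand-ins for whatever your dataset uses.

```powershell
# Sketch only: needs the AzureRM / Azure.Storage modules and an authenticated session.
# Add-VendorBatch, MacPrefix and Vendor are hypothetical names used for illustration.
function Add-VendorBatch {
    param(
        $CloudTable,              # e.g. (Get-AzureStorageTable ...).CloudTable
        [string] $PartitionKey,   # every entity in one batch must share this value
        [object[]] $Rows          # at most 100 objects with MacPrefix and Vendor properties
    )
    $batch = New-Object -TypeName Microsoft.WindowsAzure.Storage.Table.TableBatchOperation
    foreach ($row in $Rows) {
        # The RowKey must be unique within the partition; here the MAC prefix is used
        $entity = New-Object -TypeName Microsoft.WindowsAzure.Storage.Table.DynamicTableEntity -ArgumentList $PartitionKey, $row.MacPrefix
        $value = [Microsoft.WindowsAzure.Storage.Table.EntityProperty]::GeneratePropertyForString($row.Vendor)
        $entity.Properties.Add('Vendor', $value)
        $batch.InsertOrReplace($entity)
    }
    # One round trip to the Table service for the whole batch
    $CloudTable.ExecuteBatch($batch)
}

# Possible usage (variable names are placeholders for the values updated above):
# $ctx   = (Get-AzureRmStorageAccount -ResourceGroupName $resourceGroup -Name $storageAccount).Context
# $table = Get-AzureStorageTable -Name $tableName -Context $ctx
# for ($i = 0; $i -lt $vendors.Count; $i += 100) {
#     $end = [Math]::Min($i + 100, $vendors.Count) - 1
#     Add-VendorBatch -CloudTable $table.CloudTable -PartitionKey $partitionKey -Rows $vendors[$i..$end]
# }
```

Note that an entity can appear only once in a batch, so duplicate RowKey values within the same 100 rows would need to be removed first.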

With my Storage Account in Central US and myself in Sydney, Australia, loading the ~26k entries took 4 minutes 27 seconds.

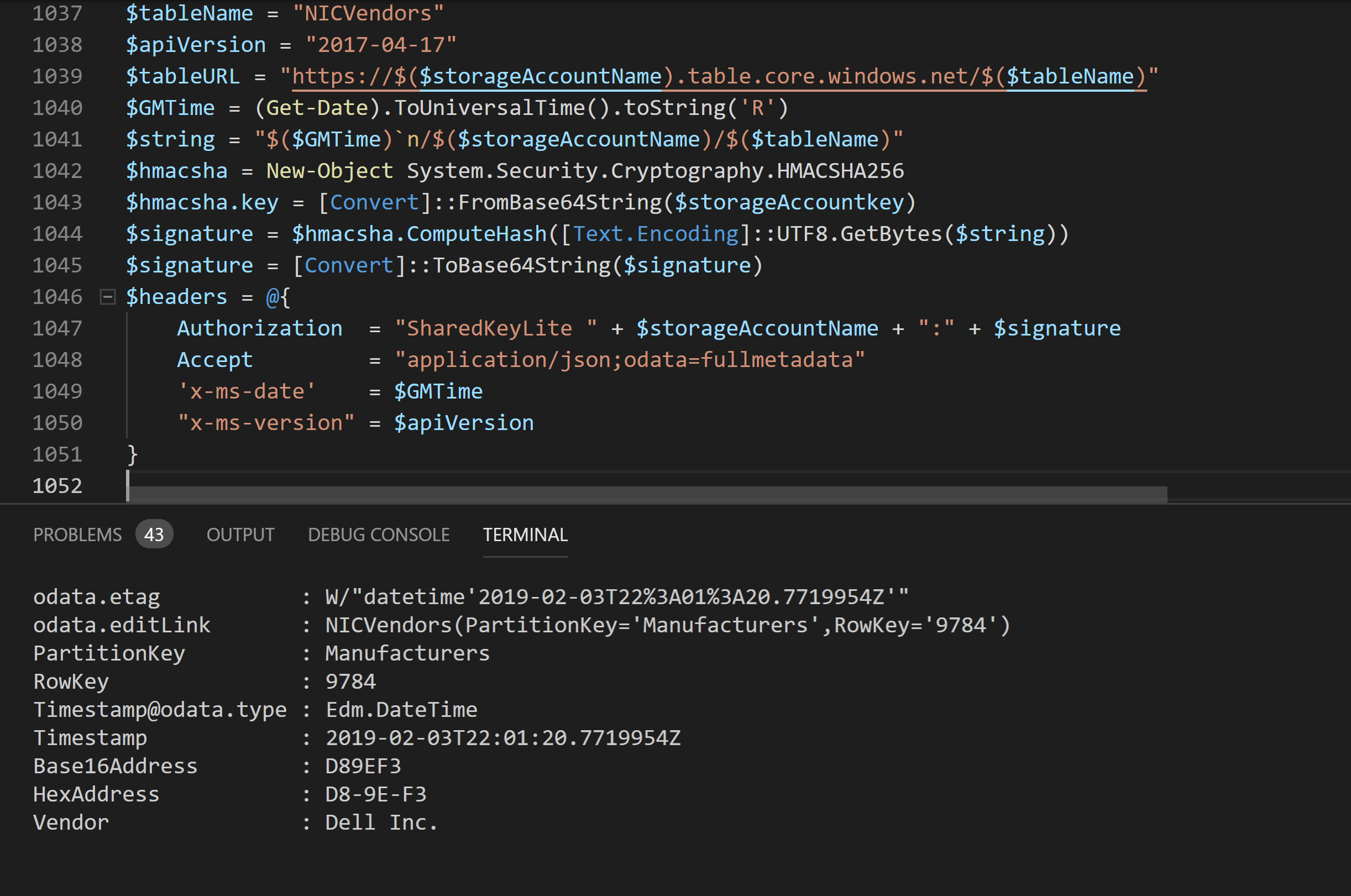

Querying Azure Table Service using the REST API and PowerShell

To then query the Table entries to find results for a Vendor, the following script can be used. Change:

- Line 2 for your Storage Account name

- Line 3 for your Storage Account Key (which you obtain from the Azure Portal for your Storage Account)

- Line 4 for your Table name

- Line 20 for the Vendor to query for
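As a hedged sketch of what such a query involves (not the original script), the function below builds a Shared Key Lite `Authorization` header and an OData `$filter` URL for the Table service. `New-TableQueryRequest` is a hypothetical name, the `Vendor` property name is an assumption about how the rows were stored, and `2017-04-17` is just one valid `x-ms-version` value.

```powershell
# Hypothetical helper: builds the URI and headers for a filtered Table service query.
function New-TableQueryRequest {
    param(
        [Parameter(Mandatory)] [string] $StorageAccount,
        [Parameter(Mandatory)] [string] $AccessKey,      # base64 Storage Account key
        [Parameter(Mandatory)] [string] $TableName,
        [Parameter(Mandatory)] [string] $Vendor,
        [string] $Date = [DateTime]::UtcNow.ToString('R') # RFC 1123 date, e.g. "Mon, 01 Jan 2018 00:00:00 GMT"
    )
    $resource = "${TableName}()"

    # Shared Key Lite for the Table service signs "<date>`n/<account>/<resource path>"
    $stringToSign = "$Date`n/${StorageAccount}/$resource"
    $hmac = New-Object System.Security.Cryptography.HMACSHA256
    $hmac.Key = [Convert]::FromBase64String($AccessKey)
    $signature = [Convert]::ToBase64String($hmac.ComputeHash([Text.Encoding]::UTF8.GetBytes($stringToSign)))
    $hmac.Dispose()

    # OData string literals escape a single quote by doubling it
    $filter = "Vendor eq '" + $Vendor.Replace("'", "''") + "'"
    $uri = "https://${StorageAccount}.table.core.windows.net/$resource" + '?$filter=' + [uri]::EscapeDataString($filter)

    [pscustomobject]@{
        Uri     = $uri
        Headers = @{
            'x-ms-date'    = $Date
            'x-ms-version' = '2017-04-17'   # assumed service version
            Authorization  = "SharedKeyLite ${StorageAccount}:$signature"
            Accept         = 'application/json;odata=nometadata'
        }
    }
}

# Demo with a dummy key (no network call is made here)
$dummyKey = [Convert]::ToBase64String([Text.Encoding]::UTF8.GetBytes('not-a-real-key'))
$request = New-TableQueryRequest -StorageAccount 'myaccount' -AccessKey $dummyKey -TableName 'NICVendors' -Vendor 'Dell Inc.'
$request.Uri

# With a real account name and key, the query itself would be along the lines of:
# $result = Invoke-RestMethod -Method Get -Uri $request.Uri -Headers $request.Headers
# $result.value | Select-Object -First 1
```

Keeping the signing step in its own function means the same headers can be reused for other filters; only the URI changes.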

Executing the script to query for Dell Inc. returns 113 entries. The graphic below shows one.

Summary

Using the AzureRM PowerShell Module and the TableBatchOperation class from the Microsoft.WindowsAzure.Storage.dll we are able to batch the record inserts in groups of 100 rows.

Using the Azure Table Service REST API we are able to quickly search the Table for the records we are looking for.