As I mentioned in a recent post, I’ve dived back into Microsoft Identity Manager. This post focuses on my recent development of a Microsoft Dynamics 365 Finance & Operations Management Agent for Microsoft Identity Manager.…

Using the new Granfeldt FIM/MIM PowerShell Management Features

Last week Søren Granfeldt released the first update in over 2 years to his hugely popular Granfeldt FIM/MIM PowerShell Management Agent. This post looks at the latest release and how to use its new features.

The new features are:

- A new option to specify an auxiliary set of credentials that is passed to scripts.

Sending Granfeldt PowerShell Management Agent Events to the Windows Application Event Log

It has been a while since I wrote a Microsoft Identity Manager, or even a Granfeldt PowerShell Management Agent, related post, primarily because it has been quite some time since I did any development for MIM. Over the last few weeks, though, I have, and I wanted to output PowerShell Management Agent events to the Windows Application Event Log.…

ChatOps for Microsoft Identity Manager

Jan 28, 2020: Also see ChatOps for Azure Active Directory.

A Bot, or ChatOps, for Microsoft Identity Manager is something I’ve had in the back of my mind for just over two years. More recently, last year, I built the Voice Assistant for Microsoft Identity Manager as a submission for an IoT Hackathon.…

Microsoft Identity Manager Sync Server HResult 0x80040E14 Error

Spoiler / TL;DR: The Microsoft Identity Manager Sync Server HResult 0x80040E14 Error is associated with a lack of available resources in your Microsoft Identity Manager environment.

Sizing servers for a Microsoft Identity Manager implementation gets easier the more you do it.…
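When an error surfaces only as an HResult, it can help to split the number into its standard bit fields before searching for it. The sketch below is a generic decoder, not anything specific to MIM: it assumes the documented HRESULT layout (bit 31 severity, bits 16 to 26 facility, bits 0 to 15 code) and includes only a few well-known facility values. For 0x80040E14 the facility is FACILITY_ITF, meaning the code is interface-specific, so the decoded fields alone will not point at a root cause such as the resource shortage described above.

```python
def decode_hresult(hresult: int) -> dict:
    """Split a 32-bit HRESULT into severity, facility and code fields."""
    # Normalise signed values (e.g. -2147217900 as seen in logs) to unsigned 32-bit.
    hresult &= 0xFFFFFFFF
    return {
        "severity": (hresult >> 31) & 0x1,    # 1 = failure, 0 = success
        "facility": (hresult >> 16) & 0x7FF,  # 11-bit facility field
        "code": hresult & 0xFFFF,             # 16-bit error code
    }


# A small subset of facility values from winerror.h, for display only.
FACILITY_NAMES = {0: "FACILITY_NULL", 4: "FACILITY_ITF", 7: "FACILITY_WIN32"}

if __name__ == "__main__":
    fields = decode_hresult(0x80040E14)
    print(fields)
    print(FACILITY_NAMES.get(fields["facility"], "unknown facility"))
```

The same value often appears in logs as the signed integer -2147217900; masking with 0xFFFFFFFF makes both forms decode identically.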

Transaction Deadlocked on Microsoft Identity Manager MA Export

In a Microsoft Identity Manager development environment, I had just defined a series of rules and was keen to export a large number of users to the MIM Service. I was pretty confident that I’d done everything correctly; however, it quickly became clear that something was wrong.…

Cannot load Windows PowerShell snap-in MIIS.MA.Config on Microsoft Identity Manager 2016 SP1

On a Microsoft Identity Manager 2016 SP1 server, running Add-PSSnapin MIIS.MA.Config throws the following error:

Add-PSSnapin : Cannot load Windows PowerShell snap-in MIIS.MA.Config because of the following error: The Windows PowerShell snap-in module C:\Program Files\Microsoft Forefront Identity Manager\2010\Synchronization Service\UIShell\Microsoft.DirectoryServices.MetadirectoryServices.Config.dll…

An Azure MFA Management Agent for User MFA Reporting using Microsoft Identity Manager

As part of the uplift in Authentication Methods capability, Microsoft has extended the Graph API to include user Azure MFA information. My customers have been requesting MFA user reporting data for some time. How many users are registered for Azure MFA?…
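Once per-user MFA records have been imported, answering "how many users are registered?" is a simple aggregation. The sketch below assumes each record is a dictionary with hypothetical `isMfaRegistered` (bool) and `methodsRegistered` (list of strings) fields, modelled loosely on the Graph authentication methods registration reports; the actual attribute names in your connector space or Graph response may differ, and the sample users are illustrative only.

```python
from collections import Counter
from typing import Iterable, Mapping


def summarise_mfa(records: Iterable[Mapping]) -> dict:
    """Count MFA-registered users and tally registered methods.

    Field names (isMfaRegistered, methodsRegistered) are assumptions.
    """
    total = 0
    registered = 0
    methods: Counter = Counter()
    for rec in records:
        total += 1
        if rec.get("isMfaRegistered"):
            registered += 1
        # Missing method lists are treated as "no methods registered".
        methods.update(rec.get("methodsRegistered", []))
    return {
        "total": total,
        "registered": registered,
        "unregistered": total - registered,
        "methods": dict(methods),
    }


if __name__ == "__main__":
    # Hypothetical sample data for illustration only.
    sample = [
        {"userPrincipalName": "alice@contoso.com", "isMfaRegistered": True,
         "methodsRegistered": ["microsoftAuthenticatorPush", "mobilePhone"]},
        {"userPrincipalName": "bob@contoso.com", "isMfaRegistered": False,
         "methodsRegistered": []},
        {"userPrincipalName": "carol@contoso.com", "isMfaRegistered": True,
         "methodsRegistered": ["mobilePhone"]},
    ]
    print(summarise_mfa(sample))
```

Counting in a single pass keeps the function usable on a generator, so a large export does not need to be held in memory.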

Automated Microsoft Identity Manager Configuration Backups & Documentation to Azure

Two and a half years ago I wrote this post on creating an Azure Function to trigger the process of automating Microsoft Identity Manager configuration backups. The Azure Function piece was a little obtuse. I used it because it was the “new thing” and my new hammer.…

Microsoft Identity Manager Graph Connector stopped-extensible-extension-error

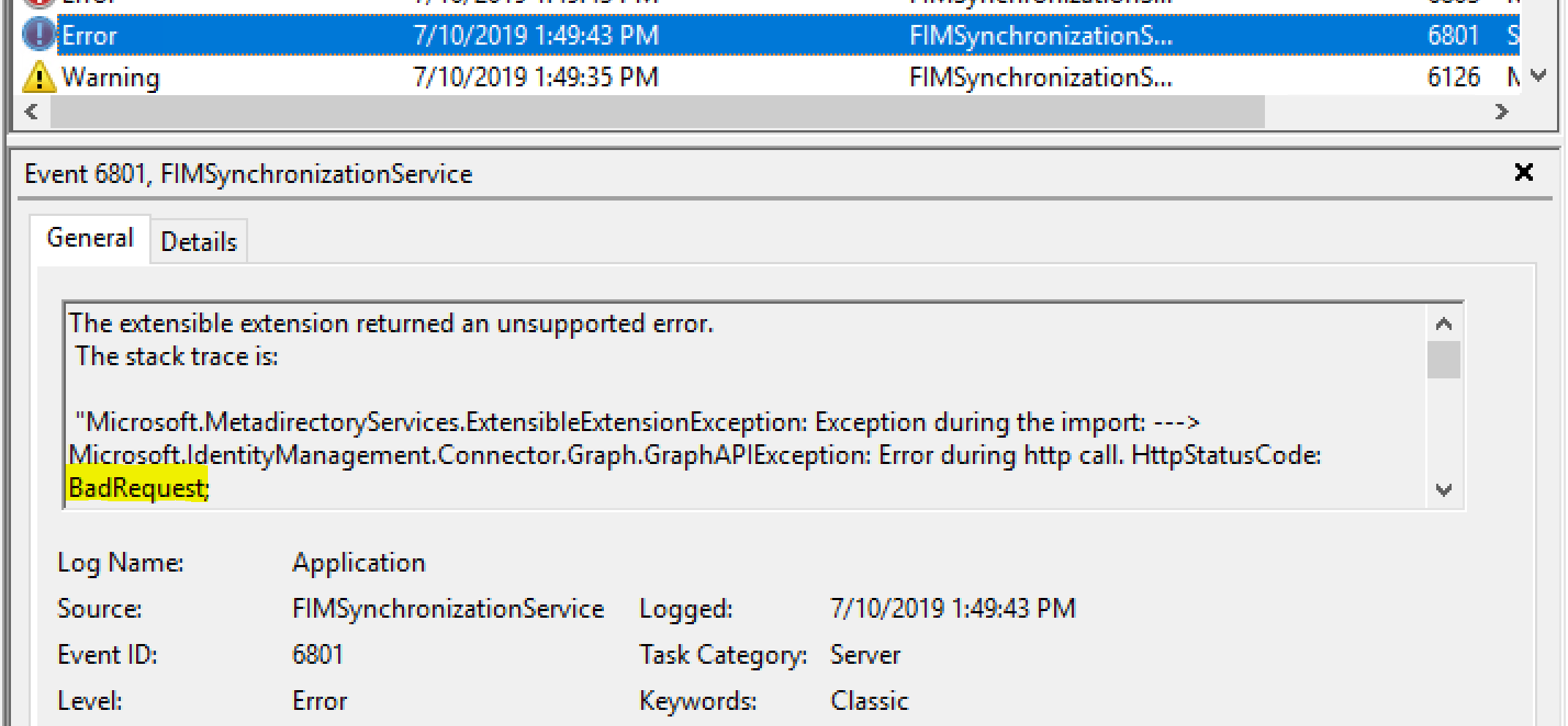

Running a Delta Import on the Microsoft Identity Manager Graph Connector returns stopped-extensible-extension-error.

Looking into the Application Event Log, we initially see BadRequest.

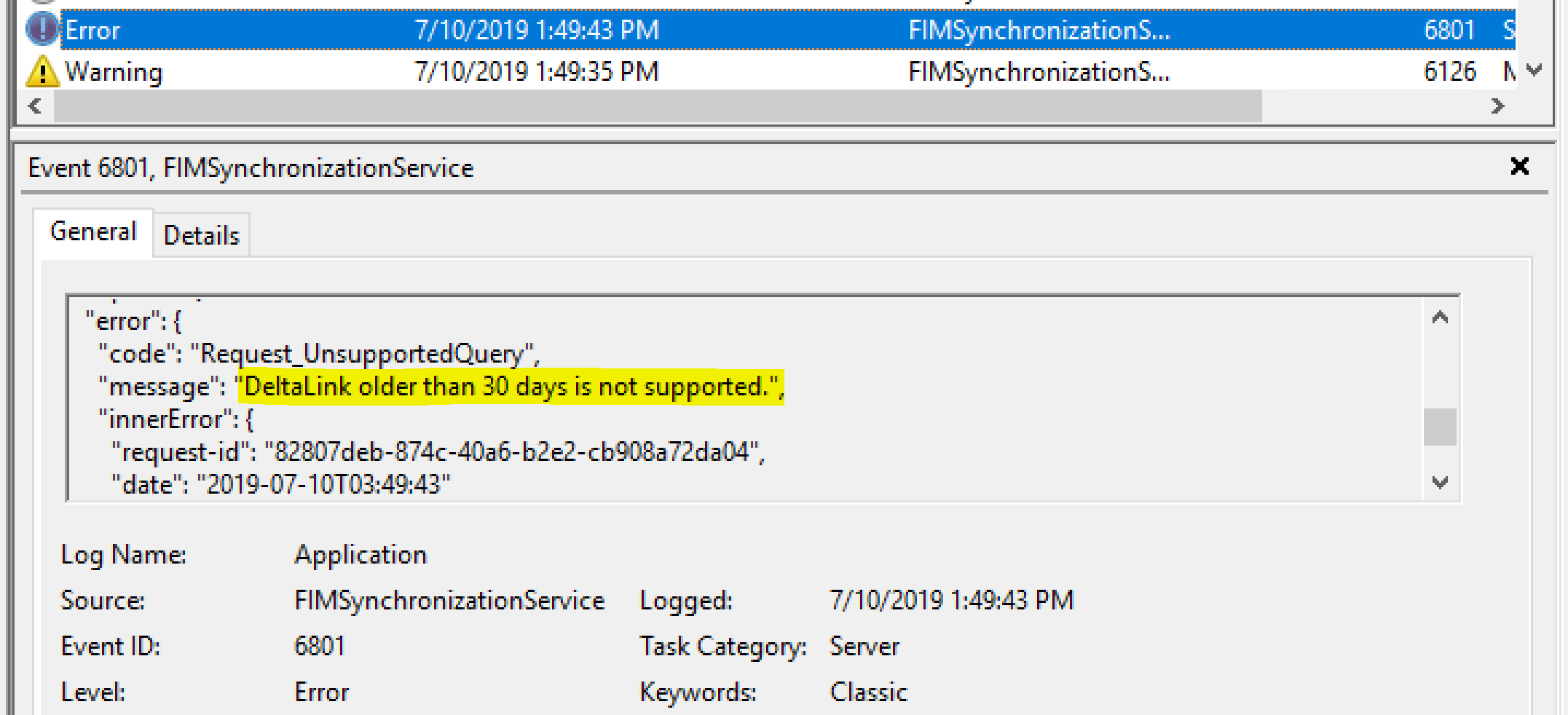

Digging deeper, we find "DeltaLink older than 30 days is not supported."

In this particular case the Microsoft Graph Connector for Microsoft Identity Manager has not run in over 30 days and the Differential Query DeltaLink cookie that I detailed in this post and this post has expired.…
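One way to avoid hitting this error from a scheduler is to check the age of the saved deltaLink before choosing a run profile. The sketch below is hypothetical scheduling logic, not part of the Graph Connector: it assumes you record a timezone-aware timestamp whenever a delta cookie is saved, takes the 30-day limit from the error message above, and assumes a Full Import is the way to obtain a fresh deltaLink.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Limit taken from the error "DeltaLink older than 30 days is not supported".
MAX_DELTA_AGE = timedelta(days=30)


def choose_import_type(last_cookie_saved: Optional[datetime],
                       now: Optional[datetime] = None) -> str:
    """Return "Delta" if the saved deltaLink should still be usable, else "Full".

    Both datetimes are expected to be timezone-aware.
    """
    now = now or datetime.now(timezone.utc)
    if last_cookie_saved is None:
        return "Full"  # no deltaLink saved yet
    if now - last_cookie_saved >= MAX_DELTA_AGE:
        # Treat exactly 30 days as expired to leave no margin for clock skew.
        # Assumption: a Full Import obtains a fresh deltaLink.
        return "Full"
    return "Delta"


if __name__ == "__main__":
    now = datetime(2020, 3, 1, tzinfo=timezone.utc)
    print(choose_import_type(now - timedelta(days=5), now))
    print(choose_import_type(now - timedelta(days=45), now))
```

Passing `now` explicitly keeps the function deterministic, which makes the boundary behaviour easy to check.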